LLM4N3:Fixing a broken memory with LSTMs

Addressing limitations of RNNs, how LSTMs "remember" across long-range dependencies, and what's next?

Hey everyone. Welcome back to LLM4N: LLM For Noobs!

A 16-part series designed to take you from zero to one in understanding Large Language Models.

In the last few issues, we made a lot of progress. From simply counting words with N-grams to understanding their meaning with Word2Vec and processing them in order with Recurrent Neural Networks (RNNs). We gave our models a way to represent meaning and basic form of memory.

But we also ran into a serious problem. Our simple RNN, for all its cleverness, had a terrible memory. It was like Ghajini (forgive my bollywood movie ref). It was unable to connect information across long sentences. We called this the vanishing gradient problem, and it was like a game of “Chinese whispers” where the message (the learning signal) fades to nothing before it can be useful.

To truly build powerful language models, we need to fix this broken memory. We need an architecture that can not only remember but also selectively forget.

Today, we’re diving into the solution that did just that: The Long Short-Term Memory (LSTM) network.

Why we need long-term memory

To understand why LSTMs were such a breakthrough, let’s revisit the core problem. For a model to truly comprehend language, it must connect information across time.

Consider this sentence:

“I grew up in France, spending my summers exploring the countryside. After many years, I can speak fluent _____”

To correctly predict the final word, “French”, the model absolutely must remember the word “France” from the very beginning of the sentence. This is the challenge of long-term dependencies. To bridge the gap between a piecec of context and the point where that context is needed.

A simple RNN fails catastrophicall here. Its memory is like a single, small whiteboard. At every single word, the RNN takes in the new information, combines it with what’s on the whiteboard, erases the board, and writes a new summary.

By the time it gets to the end of the sentence, the crucial information about “France” has been overwritten and diluted so many times that it’s completely lost.

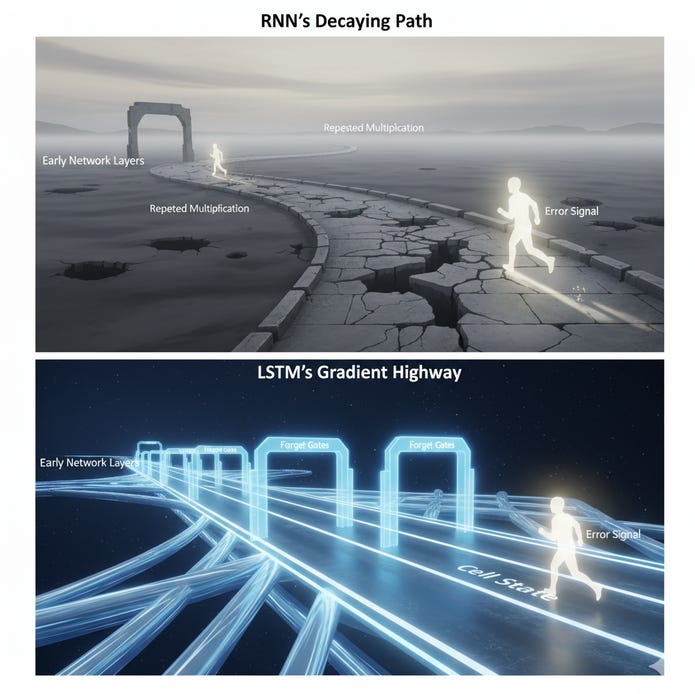

This is a mathematical flaw. During training, the error signal that says “you should have predicted ‘French’” has to travel all the way back to the word “france” to teach the model. In an RNN, this signal gets weaker and weaker with every step back, until it becomes zero.

The network never learns the long-range connection.

How do you build a system that can protect important information from being constantly overwritten?

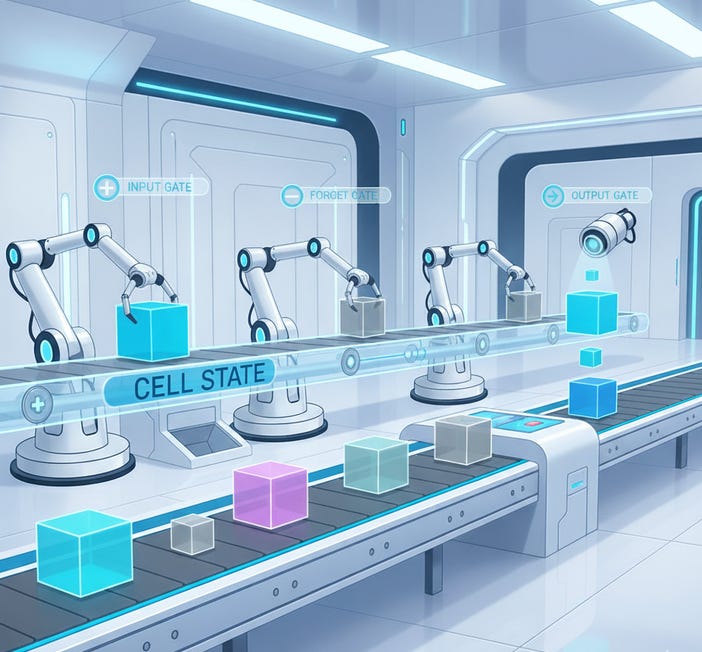

A conveyor belt for memory

The core innovation of the LSTM, introduce by Sepp Hochreiter and Jürgen Schmidhuber in 1997, is an architecture explicitly designed to remember information for long periods.

The most powerful analogy for an LSTM is to imagine its memory as an information conveyor belt that runs alongside the main production line. This belt, called the cell state, is designed to carry information straight through the entire process, from one end of a sentence to the other, with minimal changes.

Standing next to this conveyor belt are a series of intelligent, learnable gatekeepers.

These gates are tiny neural networks that act like valves, controlling the flow of information. They have the power to:

Forget: Look at the information on the belt and selectively remove things that are no longer needed.

Input(Write): Look at new information coming in and decide what, if any, is important enough to place onto the conveyor belt.

Output(Read): Look at the information currently on the belt and decide what part of it is useful for task at this exact moment.

This design brilliantly solves the “whiteboard problem”. Instead of one memory space that’s constantly being overwritten, LSTMs have a protected, persistent memory line that is only modified by these careful gatekeepers.

This allows the network to learn to hold onto a piece of information like “France” for hundreds of steps and then access it when it becomes relevant.

Dissecting an LSTM Cell

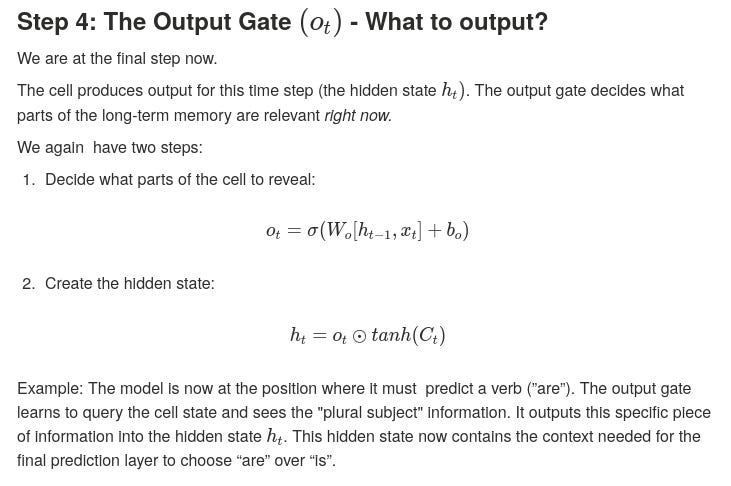

The true magic of the LSTM is its separation of long-term memory from short-term “working” memory. A simple RNN uses one vector (hidden state) for both roles, creating a bottleneck. The LSTM introduces two distinct pathways:

The Cell State (C_t): This is the conveyor belt. It represents the network’s long-term memory. It’s main job is to carry context across the sequence with as little disruption as possible.

The Hidden State (h_t): This is the output of the cell at the current time step. It serves as the “working memory” and is what the network uses to make a prediction or passes to the next layer. It’s a filtered, task-specific version of the long-term memory.

This segregation is incredibly powerful. The model can store a general fact like “the subject of the sentence is singular” in its cell state without that fact interfering with the processing of adjectives or adverbs. It can hold it in reserve until it sees a verb, at which point the output gate learns to release that information to ensure correct grammar.

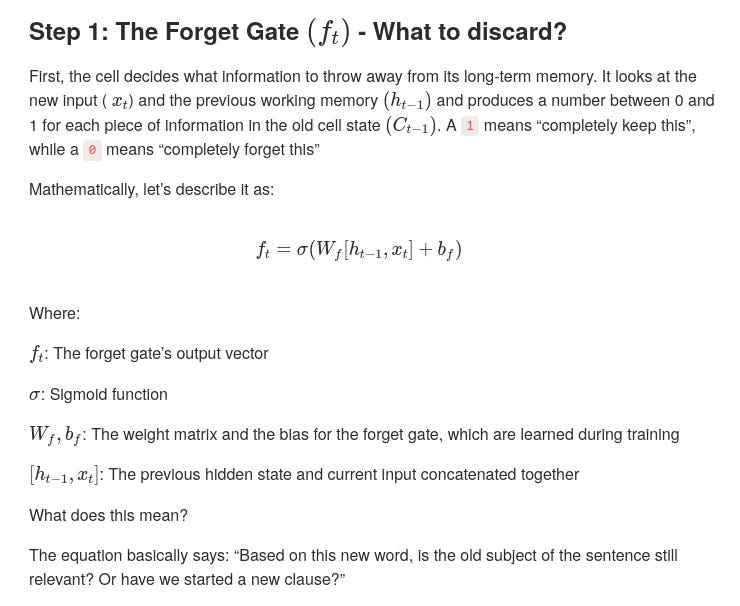

Okay Okay, enough theory. Let’s walk through how these pieces work together, step-by-step.

So...how does it work?

(Substack does not support in-line latex rendering, so please bear with me as I paste from my draft. Images may look distorted.)

Imagine an LSTM processing the sentence: “The cats …… they are sleeping.” The goal is to correctly predict the verb “are” instead of “is”. To do this, the model must remember that the subject, “cats”, is plural.

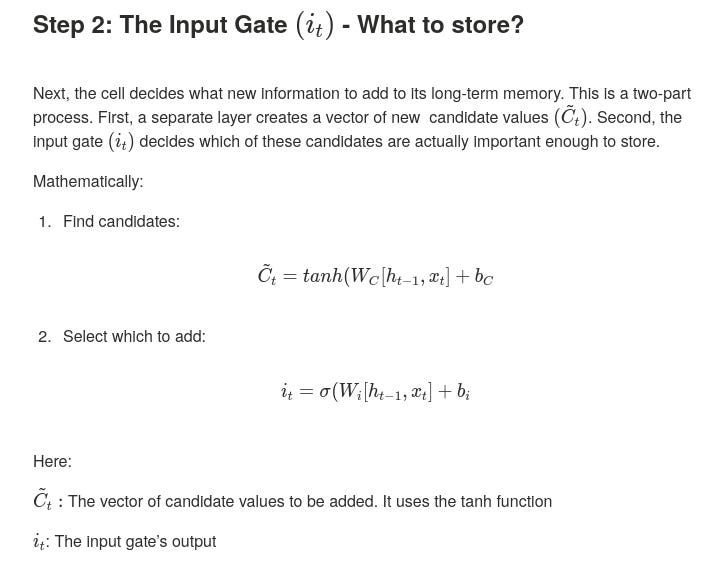

Example: When the model sees the word “Sleeping”, the tanh layer creates a candidate vector representing this new action. The input gate then decides this is important new information and outputs a 1, allowing it to be added to memory

Well...did we fix the gradient problem, yet?

That’s a fair question for a lot of the equations we described above.

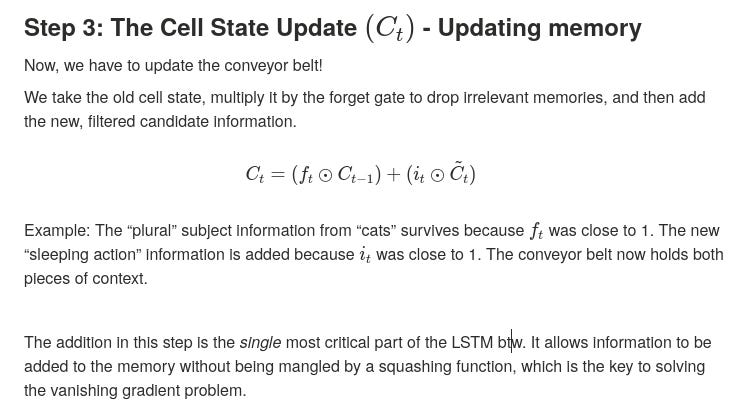

The solution to the vanishing gradient problem lies in the elegant design of the cell state update. During backpropagation, that simple addition from Step 3 becomes an “uninterrupted gradient highway”.

In a simple RNN, the gradient is repeatedly multiplied by a weight matrix and a squashing function’s derivative, causing it to shrink. In an LSTM, the main path for the gradient is through the cell state. Because of the additive update, the gradient flowing from C_t to C_{t-1} is multiplied directly by the forget gate vector.

This is the main idea.

The network can learn to preserve the gradeint signal by setting the forget gates to 1. The LSTM can literally learn to not forget the error signal.

This mechanism is what the original authors called the Constant Error Carousel CEC). It allwows the error to “ride” backward through time without decaying.

The forget gate doubles as a dynamic, learnable regulator for the gradient itself.

A simpler cousing: GRU

The success of the LSTM inspired researchers to ask: can we achieve this simpler design? The most popular variant is the Gated Recurrent Unit (GRU), introduced in 2014.

The GRU simplifies the architecture by:

Merging the cell state and hidden state into a single state vector.

Using only two gates (an Update Gate and a Reset Gate) instead of three.

In many tasks, GRUs perform comparably to LSTMs while being more computationally efficient and faster to train due to having fewer parameters. The standard practice is often to try a GRU first, and only move to an LSTM if more expressive power is needed.

We fixed the memory, so we’re done, right?

Not quite. LSTMs and GRUs were a monumental step forward. They fixed the memory problem and dominated sequence modeeling for years, enabling breakthroughs in machine translation, speech recognition, and more.

But they still have a fundamental bottleneck. They process information sequentially, word by word. To understand the last word of a sentence, they must have compressed all the information from the entire sentence into a single, fixed-size vector state.

This is like trying to summarize the entire Lord of the Rings trilogy in one single paragraph. You're bound to lose critical details.

What if a model could avoid this compression? What if, at every step, it could look back at all the previous words at once and decide which ones are most important for the current task?

How do you build a network that can shift its focus or attention?

That is the question that led to the next great revolution in AI and the birth of the Transformer architecture.

And with that, we finish the first phase of LLM4N.

We will dive into the world of transformers, dissecting every single detail and how they work from the next issue onwards!

Are you excited?! I sure am!

See you next friday!

Resources

Find awesome machine learning resources here.

If you’d rather read blogs, here is gold.